Migrate from AWS Aurora to GCP’s new PostgreSQL, AlloyDB

Over the last eight years, our team has been building experiences that simplify the creation of secure cloud architectures through infrastructure as code (IaC). Some members of our team are former AWS consultants with CloudFormation battle scars.

A magical thing happened about a year ago, when these team members started pooling their experience into a new Terraform repo: the 66degrees “Secure Landing Zone” (SLZ), which became the code that accompanied our initial projects with clients.

Since then, the engineering leadership at 66degrees has continued to invest, and the team has built SLZ extensions to demonstrate reference architectures across applications, data, and DevOps.

Why bring this up in an article about databases? This week a data science client remarked that they had been part of cloud foundation projects multiple times in the past, and this was the first time they had seen one deployed in 3 days. In their experience, such projects have generally taken 4+ months. This is a testament to our team of experts and engineers at 66degrees, and our ability to get our clients where they need to be.

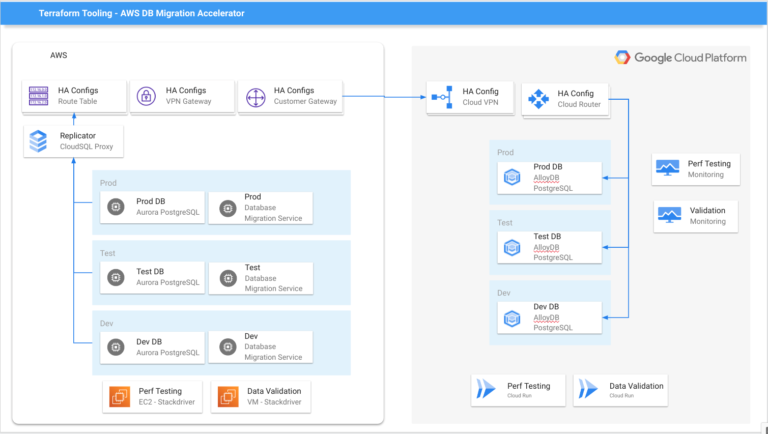

The SLZ extension that we’re talking about today is an AWS Aurora PostgreSQL migration accelerator with Google’s newly released AlloyDB Engine:

AWS Migration DB Accelerator vs. GCP’s AlloyDB

This extension has been used for large-scale migrations from AWS Aurora to GCP Cloud SQL and will also be used for AlloyDB.

In addition to setting up the secure networking and migration environments, the extension includes containerized deployments of Google’s open source Data Validation Tool (DVT) and performance testing using pgbench.

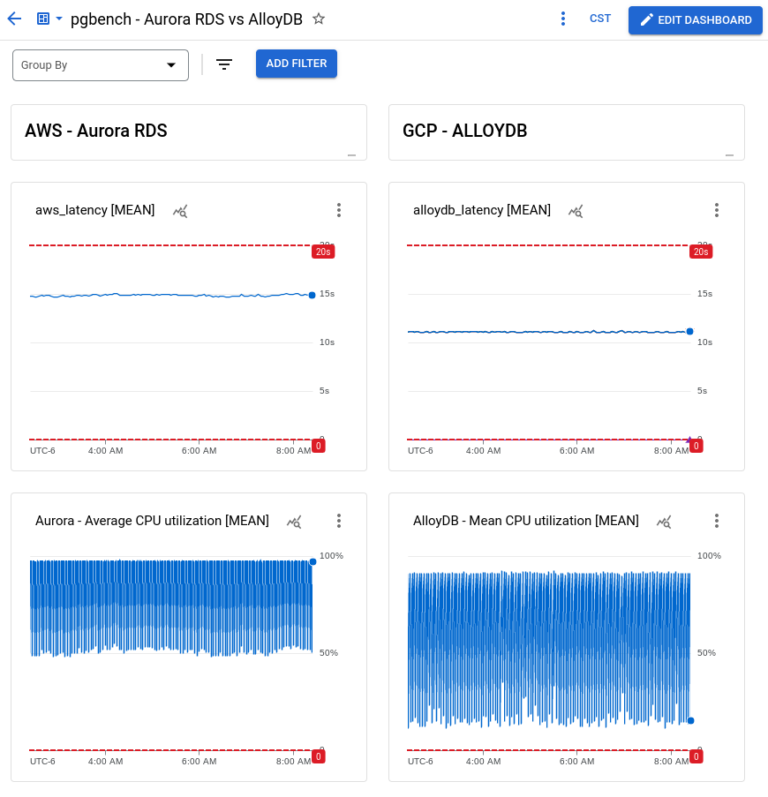

With the upcoming AlloyDB release, we decided to do an Aurora PostgreSQL to AlloyDB migration and an apples-to-apples performance test.

The continuous performance test results, as shown in the GCP dashboard of our migration toolkit:

The queries used for the test involved SQL window functions that compute moving averages and customer lifetime values. In our simple test, AlloyDB performed 25% faster and with lower CPU usage (the test setup configuration is at the end of the post).
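For readers less familiar with window functions, here is a minimal sketch of the kind of query described above. It is not the query used in our test: the `orders` table, its columns, and the sample rows are hypothetical, and the example runs against an in-memory SQLite database (version 3.25 or later, which added window functions) purely so that it is self-contained. The same `OVER (PARTITION BY ... ORDER BY ...)` syntax is valid in PostgreSQL.

```python
import sqlite3

# Hypothetical sample data: (customer_id, order_day, amount).
# These rows are illustrative only and are not taken from the benchmark.
ORDERS = [
    (1, 1, 10.0),
    (1, 2, 20.0),
    (1, 3, 30.0),
    (2, 1, 5.0),
    (2, 2, 15.0),
]

QUERY = """
SELECT customer_id,
       order_day,
       -- moving average over the current order and the one before it
       AVG(amount) OVER (
           PARTITION BY customer_id ORDER BY order_day
           ROWS BETWEEN 1 PRECEDING AND CURRENT ROW
       ) AS moving_avg,
       -- running total of spend per customer (a simple lifetime value)
       SUM(amount) OVER (
           PARTITION BY customer_id ORDER BY order_day
           ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW
       ) AS lifetime_value
FROM orders
ORDER BY customer_id, order_day
"""


def run_window_query(rows):
    """Load rows into an in-memory table and return the window query result."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE orders (customer_id INTEGER, order_day INTEGER, amount REAL)"
        )
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
        return conn.execute(QUERY).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for row in run_window_query(ORDERS):
        print(row)
```

Each output row carries the customer, the order day, the two-order moving average, and the cumulative spend up to that order. Queries of this shape sort and scan each partition, which makes them a reasonable stand-in for analytical workloads.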

Others in the database benchmarking industry will be publishing rigorous studies of AlloyDB’s performance. That said, the performance that really matters to our clients is how AlloyDB performs with their databases and their queries. Our team has made that answerable in a few short days.

Google has funding available to pay for eligible clients to implement performance test migrations with 66degrees engineering support.

Contact us today to get started.

Let’s get you there!

Test Specifications

Databases:

- AWS Aurora instance: db.r5.large (2 vCPUs, 16 GiB memory)

- AlloyDB: 2 vCPUs, 16 GB memory

- Data: TPC-H 2 GB database

pgbench clients:

- AWS VM: t2.small (1 vCPU, 2 GiB memory)

- GCP Cloud Run: 1 vCPU, 2 GiB memory

- pgbench config: number_of_clients=1, number_of_threads=1, number_of_transactions=10
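As a rough illustration, the pgbench settings above map onto the standard pgbench command-line flags `-c` (clients), `-j` (threads), and `-t` (transactions per client). The sketch below only builds the command; it does not run it. The script file name `query.sql` and the database name `tpch` are hypothetical placeholders, and connection details (host, user, password) are assumed to come from the usual `PG*` environment variables.

```python
import shlex


def build_pgbench_command(script_path, dbname, clients=1, threads=1, transactions=10):
    """Return the pgbench argument list for a custom-script benchmark run."""
    return [
        "pgbench",
        "-n",                      # skip vacuuming pgbench's built-in tables (custom script only)
        "-c", str(clients),        # number of concurrent clients
        "-j", str(threads),        # number of worker threads
        "-t", str(transactions),   # transactions each client runs
        "-f", script_path,         # file holding the custom test query
        dbname,
    ]


if __name__ == "__main__":
    # "query.sql" and "tpch" are placeholder names, not the ones used in our test.
    cmd = build_pgbench_command("query.sql", "tpch")
    print(shlex.join(cmd))
```

With the defaults shown, the printed command is `pgbench -n -c 1 -j 1 -t 10 -f query.sql tpch`. Keeping the command as a list makes it straightforward to pass to a process runner inside a container without shell quoting issues.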

pgbench test query: